Can a machine be conscious?

By Sadaputa Dasa

SADAPUTA DASA studied at the State University of New York and Syracuse University and later received a National Science Fellowship. He went on to complete his Ph.D. in mathematics at Cornell, specializing in probability theory and statistical mechanics.

Science fiction writers often try to solve the problems of old age and death by taking advantage of the idea that a human being is essentially a complex machine. In a typical scene, doctors and technicians scan the head of the dying Samuel Jones with a “cerebroscope,” a highly sensitive instrument that records in full detail the synaptic connections of the neurons in his brain. A computer then systematically transforms this information into a computer program that faithfully simulates that brain’s particular pattern of internal activity.

When this program is run on a suitable computer, the actual personality of Mr. Jones seems to come to life through the medium of the machine. “I’ve escaped death!” the computer exults through its electronic phoneme generator. Scanning about the room with stereoscopically mounted TV cameras, the computerized “Mr. Jones” appears somewhat disoriented in his new embodiment. But when interviewed by old friends, “he” displays Mr. Jones’s personal traits in complete detail. In the story, Mr. Jones lives again in the form of the computer. Now his only problem is figuring out how to avoid being erased from the computer’s memory.

Although this story may seem fantastic, some of the most influential thinkers in the world of modern science take very seriously the basic principles behind it. In fact, researchers in the life sciences now almost universally assume that a living being is nothing more than a highly complex machine built from molecular components. In the fields of philosophy and psychology, this assumption leads to the inevitable conclusion that the mind involves nothing more than the biophysical functioning of the brain. According to this viewpoint, we can define in entirely mechanistic terms the words we normally apply to human personality—words like consciousness, perception, meaning, purpose, and intelligence.

Along with this line of thinking have always gone idle speculations about the construction of machines that can exhibit these traits of personality. But now things have gone beyond mere speculation. The advent of modern electronic computers has given us a new field of scientific investigation dedicated to actually building such machines. This is the field of artificial intelligence research, or “cognitive engineering,” in which scientists proceed on the assumption that digital computers of sufficient speed and complexity can in fact produce all aspects of conscious personality. Thus we learn in the 1979 M.I.T. college catalogue that cognitive engineering involves an approach to the subjects of mind and intelligence which is “quite different from that of philosophers and psychologists, in that the cognitive engineer tries to produce intelligence.”

In this article we shall examine the question of whether it is possible for a machine to possess a conscious self that perceives itself as seer and doer. Our thesis will be that while computers may in principle generate complex sequences of behavior comparable to those produced by human beings, computers cannot possess conscious awareness without the intervention of principles of nature higher than those known to modern science. Ironically, we can base strong arguments in support of this thesis on some of the very concepts that form the foundation of artificial intelligence research. As far as computers are concerned, the most reasonable inference we can draw from these arguments is that computers cannot be conscious. When applied to the machine of the human brain, these arguments support an alternative, nonmechanistic understanding of the conscious self.

To begin, let us raise some questions about a hypothetical computer possessing intelligence and conscious self-awareness on a human level. This computer need not duplicate the mind of a particular human being, such as our Mr. Jones, but must simply experience an awareness of thoughts, feelings, and sensory perceptions comparable to our own.

First, let us briefly examine the internal organization of our sentient computer. Since it is a digital computer, it consists of an information storehouse, or memory; an apparatus called the central processing unit (CPU); and various devices for exchanging information with the environment.

The memory is simply a passive medium used to record large amounts of information in the form of numbers. We can visualize a typical computer memory as a series of labeled boxes, each of which can store a number. Some of these boxes normally contain numerically coded instructions specifying the computer’s program of activity. Others contain data of various kinds, and still others store the intermediate steps of calculations. These numbers can be represented physically in the memory as patterns of charges on microminiature capacitors, patterns of magnetization on small magnets, or in many other ways.

The CPU performs all the computer’s active operations, which consist of a fixed number of simple operations of symbol manipulation. These operations typically involve the following steps: First, from a specified location (or “address”) in the memory, the CPU obtains a coded instruction identifying the operation to be performed. According to this instruction, the CPU may obtain additional data from the memory. Then the CPU performs the operation itself. This may involve input (reading a number into the memory from an external device) or output (transmitting a number from the memory to an external device). Or the operation may involve transforming a number according to some simple rule, or shifting a number from one memory location to another. In any case, the final step of the operation will always involve the selection of a memory address where the next coded instruction is to be sought.

A computer’s activity consists of nothing more than steps of this kind, performed one after another. The instruction codes stored in the passive memory specify the operations the CPU is to execute. The function of the CPU is simply to carry them out sequentially. The CPU’s physical construction, like that of the memory, may include many kinds of components, ranging from microminiature semiconductor junctions to electromechanical relays. It is only the logical arrangement of these components, and not their particular physical constitution, that determines the functioning of the CPU.
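The memory-and-CPU arrangement just described can be sketched in a few lines. This is a toy machine under stated assumptions: the opcode numbers, the three-box instruction format, and the names `run`, `COPY`, `INC`, `DEC`, `JZ`, and `JMP` are all hypothetical and do not belong to any real CPU. What the sketch does show is that instructions and data sit side by side in memory as plain numbers, and that every step ends by selecting the address of the next instruction.

```python
# Hypothetical opcodes for a toy machine (not any real CPU's instruction set).
HALT, COPY, INC, DEC, JZ, JMP = range(6)

def run(memory: list[int], pc: int = 0, max_steps: int = 10_000) -> list[int]:
    """Fetch-execute loop. Each instruction occupies three consecutive boxes
    [opcode, a, b]; the last act of every step is choosing the next address."""
    for _ in range(max_steps):
        op, a, b = memory[pc], memory[pc + 1], memory[pc + 2]  # fetch the coded instruction
        next_pc = pc + 3                                       # default: the following instruction
        if op == HALT:
            return memory
        elif op == COPY:
            memory[b] = memory[a]      # copy box a into box b
        elif op == INC:
            memory[a] += 1             # add one to box a
        elif op == DEC:
            memory[a] -= 1             # take one from box a
        elif op == JZ:
            if memory[a] == 0:         # if box a holds zero, jump to address b
                next_pc = b
        elif op == JMP:
            next_pc = a                # unconditional jump to address a
        else:
            raise ValueError(f"unknown opcode {op} at address {pc}")
        pc = next_pc
    raise RuntimeError("step limit reached")

# A tiny program that adds box 100 into box 101 using only increments and decrements.
memory = [0] * 110
program = [
    JZ, 100, 12,   # address 0: if box 100 holds 0, jump to HALT
    DEC, 100, 0,   # address 3: take one from box 100
    INC, 101, 0,   # address 6: add one to box 101
    JMP, 0, 0,     # address 9: go back to address 0
    HALT, 0, 0,    # address 12: stop
]
memory[:len(program)] = program
memory[100], memory[101] = 3, 4
run(memory)
print(memory[101])  # 7
```

Nothing in the loop "knows" it is adding; it only copies, increments, decrements, and tests boxes, which is the point the paragraph above makes about the CPU.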

Church’s Thesis

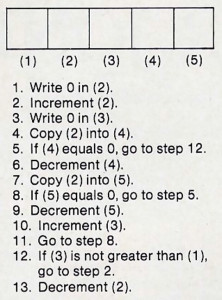

We can most easily understand the operation of a computer by considering a simple example. Figure 1 illustrates a program of computer instructions for calculating the square root of a number (see Notes). The thirteen numbered statements correspond to the list of coded instructions stored in the computer’s memory. (Here, for clarity’s sake, we have written them out in English.) The five boxes correspond to areas in the memory that store data and intermediate computational steps. To simulate the operation of the computer, place a number, such as 9, in box (1). Then simply follow the instructions. When you have completed the last instruction, the square root of your original number will be in box (2). In a computer, each of these instructions would be carried out by the CPU. They illustrate the kind of elementary operations used by present-day computers (although the operations do not correspond exactly to those of any particular computer).
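Since the exact statements of Figure 1 are not reproduced in this text, here is a hypothetical stand-in written in the same spirit: thirteen elementary steps operating on boxes (1) through (5), with the number in box (1) and the answer left in box (2). The method is an assumption, not necessarily the one in Figure 1. It relies on the fact that 1 + 3 + 5 + ... + (2r − 1) = r², and counts how many successive odd numbers can be taken out of the starting number. For a number that is not a perfect square, it leaves the whole-number part of the root. The names `PROGRAM` and `run` are illustrative.

```python
# Hypothetical 13-step program: a stand-in for Figure 1, not a copy of it.
# Each step is (operation, box, ...); boxes 1-5 hold data, index 0 is unused.
PROGRAM = [
    ("copy", 1, 3),  # 0: box 3 = the number whose root we want
    ("inc", 4),      # 1: box 4 = first odd number, 1
    ("copy", 4, 5),  # 2: box 5 = countdown for the current odd number
    ("jz", 5, 8),    # 3: whole odd number taken out -> go to step 8
    ("jz", 3, 12),   # 4: nothing left to take out -> stop
    ("dec", 3),      # 5: take one from the remainder
    ("dec", 5),      # 6: and one from the countdown
    ("jmp", 3),      # 7: repeat
    ("inc", 2),      # 8: one more odd number fitted, so the root grows by one
    ("inc", 4),      # 9: odd numbers go up by two ...
    ("inc", 4),      # 10: ... so increment twice
    ("jmp", 2),      # 11: take out the next odd number
    ("halt",),       # 12: box 2 now holds the whole-number square root
]

def run(program, boxes):
    """Carry out the steps one at a time, as a CPU would."""
    step = 0
    while True:
        op, *args = program[step]
        step += 1  # default: go on to the next step
        if op == "copy":
            boxes[args[1]] = boxes[args[0]]
        elif op == "inc":
            boxes[args[0]] += 1
        elif op == "dec":
            boxes[args[0]] -= 1
        elif op == "jz":
            if boxes[args[0]] == 0:
                step = args[1]
        elif op == "jmp":
            step = args[0]
        elif op == "halt":
            return boxes
        else:
            raise ValueError(f"unknown operation {op!r}")

boxes = [0] * 6   # boxes (1)-(5); index 0 unused
boxes[1] = 9
run(PROGRAM, boxes)
print(boxes[2])   # 3
```

Following the list by hand with 9 in box (1) gives the same result as the code: after three odd numbers (1, 3, 5) the remainder is exhausted, and box (2) holds 3.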

The method of finding a square root given in our example may seem cumbersome and obscure, but it is typical of how computers operate. In fact, the practical applicability of computers rests on the observation that every fixed scheme of computation ever formulated can be reduced to a list of simple operations like the one in our example. This observation, first made by several mathematicians in the 1930s and ’40s, is commonly known as Church’s thesis. It implies that, in principle, any scheme of symbol manipulation we can precisely define can be carried out by a modern digital computer.

At this point, let us consider our hypothetical sentient computer. According to the exponents of artificial intelligence, the intricate behavior characteristic of a human being is nothing more than a highly complex scheme of symbol manipulation. Using Church’s thesis, we can break down this scheme into a program of instructions comparable to our example in Figure 1. The only difference is that this program will be exceedingly long and complex; it may run to millions of steps. Of course, up to now no one has even come close to actually producing a formal symbolic description of human behavior. But for the sake of argument let’s suppose such a description could be written and expressed as a computer program.

Now, assuming a computer is executing such a highly complex program, let us see what we can understand about the computer’s possible states of consciousness. When executing the program, the computer’s CPU will be carrying out only one instruction at any given time, and the millions of instructions comprising the rest of the program will exist only as an inactive record in the computer’s memory. Now, intuitively it seems doubtful that a mere inactive record could have anything to do with consciousness. Where, then, does the computer’s consciousness reside? At any given moment the CPU is simply performing some elementary operation, such as “Copy the number in box (1687002) into box (9994563).” In what way can we correlate this activity with the conscious perception of thoughts and feelings?

The researchers of artificial intelligence have an answer to this question, which they base on the idea of levels of organization in a computer program. We shall take a few paragraphs here to briefly explain and investigate this answer. First we shall need to know what is meant by “levels of organization.” Therefore let us once again consider the simple computer program of Figure 1. Then we shall apply the concept of levels of organization to the program of our “sentient” computer and see what light this concept can shed on the relation between consciousness and the computer’s internal physical states.

Levels of Organization

Although the square-root program of Figure 1 may appear to be a formless list of instructions, it actually possesses a definite structure, which is outlined in Figure 2 below. This structure consists of four levels of organization. On the highest level, the function of the program is described in a single sentence that uses the symbol square root. On the next level, the meaning of this symbol is defined by a description of the method the program uses to find square roots. This description makes use of the symbol squared, which is similarly defined on the next lower level in terms of another symbol, sum. Finally, the symbol sum is defined on the lowest level in terms of the combination of elementary operations actually used to compute sums in the program. Although for the sake of clarity we have used English sentences in Figure 2, the description on each level would normally use only symbols for elementary operations, or higher-order symbols defined on the next level down.

These graded symbolic descriptions actually define the program, in the sense that if we begin with level 1 and expand each higher-order symbol in terms of its definition on a lower level, we will wind up writing the list of elementary operations in Figure 1. The descriptions are useful in that they provide an intelligible account of what happens in the program. Thus on one level we can say that numbers are being squared, on another level that they are being added, and on yet another that they are being incremented and decremented. But the levels of organization of the program are only abstract properties of the list of operations given in Figure 1. When a computer executes this program, these levels do not exist in any real sense, for the computer actually performs only the elementary operations in the list.
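The four levels can be mimicked with nested definitions, one function per symbol. This is a sketch under assumptions: the particular method on level 2 (step a candidate root upward until the next square would be too large) and the function names `add`, `squared`, and `square_root` are illustrative choices, not a transcription of Figure 2. Only level 4 touches the elementary operations directly; each higher level is written purely in terms of the symbol defined just below it.

```python
# Level 4: "sum" in terms of elementary operations (increment and decrement).
def add(a: int, b: int) -> int:
    """Add b (assumed non-negative) to a, one unit at a time."""
    while b != 0:
        a += 1  # increment
        b -= 1  # decrement
    return a

# Level 3: "squared" in terms of "sum": add r to a running total, r times.
def squared(r: int) -> int:
    total, count = 0, r
    while count != 0:
        total = add(total, r)
        count -= 1
    return total

# Level 2: "square root" in terms of "squared": raise r while (r + 1) squared still fits.
def square_root(n: int) -> int:
    r = 0
    while squared(add(r, 1)) <= n:
        r = add(r, 1)
    return r

# Level 1: "put the square root of the number in box (1) into box (2)."
boxes = {1: 9, 2: 0}
boxes[2] = square_root(boxes[1])
print(boxes[2])  # 3
```

When this runs, the function names play no part in what the machine does: execution consists entirely of increments, decrements, and tests. The names are descriptions for the human reader, which is the sense in which the levels exist only as abstract properties of the operation list.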

In fact, we can go further and point out that even this last statement is not strictly true, because what we call “the elementary operations” are themselves symbols, such as Increment (3), that refer to abstract properties of the computer’s underlying machinery. When a computer operates, all that really happens is that matter and energy undergo certain transformations according to a pattern determined by the computer’s physical structure.

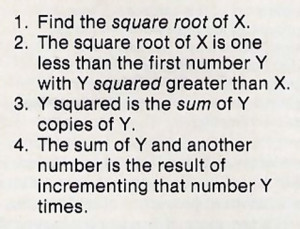

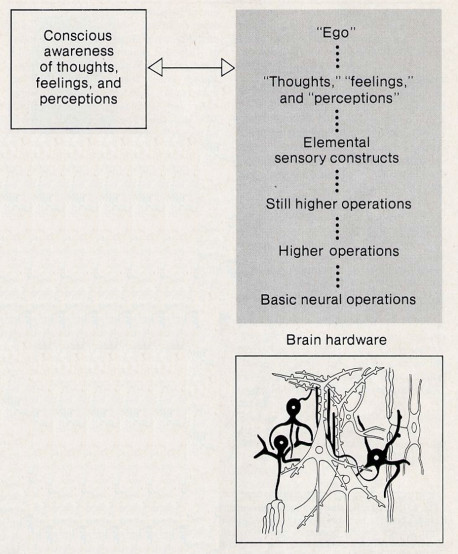

In general, any computer program that performs some complex task can be resolved into a hierarchy of levels of description similar to the one given above. Researchers in artificial intelligence generally visualize their projected “intelligent” or “sentient” programs in terms of a hierarchy such as the following: On the bottom level they propose to describe the program in terms of elementary operations. Then come several successive levels involving mathematical procedures of greater and greater intricacy and sophistication. After this comes a level in which they hope to define symbols that refer to basic constituents of thoughts, feelings, and sensory perceptions. Next comes a series of levels involving more and more sophisticated mental features, culminating in the level of the ego, or self.

Here, then, is how artificial intelligence researchers understand the relation between computer operations and consciousness: Consciousness is associated with a “sentient” program’s higher levels of operation, levels on which symbolic transformations take place that directly correspond to higher sensory processes and the transformations of thoughts. On the other hand, the lower levels are not associated with consciousness. Their structure can be changed without affecting the consciousness of the computer, as long as the higher-level symbols are still given equivalent definitions. Referring again to our square-root program, we see that this idea is confirmed by the fact that the process of finding a square root given on level 2 in Figure 2 will remain essentially the same even if we define the operation of squaring on level 3 in some different but equivalent way.
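The claim that a lower level can be swapped for an equivalent one without disturbing the level above can be checked directly. In this hypothetical sketch, level 2 (`square_root`) is written once and handed either of two level-3 definitions of squaring: repeated addition of r, or the sum of the first r odd numbers. All names and both methods are illustrative assumptions, not taken from Figure 2.

```python
from typing import Callable

def add(a: int, b: int) -> int:
    """Level 4: sum by elementary increments and decrements (b >= 0)."""
    while b != 0:
        a += 1
        b -= 1
    return a

def squared_by_repeated_addition(r: int) -> int:
    """One level-3 definition: r + r + ... + r (r terms)."""
    total = 0
    for _ in range(r):
        total = add(total, r)
    return total

def squared_by_odd_numbers(r: int) -> int:
    """An equivalent level-3 definition: 1 + 3 + 5 + ... + (2r - 1)."""
    total, odd = 0, 1
    for _ in range(r):
        total = add(total, odd)
        odd = add(odd, 2)
    return total

def square_root(n: int, squared: Callable[[int], int]) -> int:
    """Level 2, written once: raise r while (r + 1) squared still fits in n."""
    r = 0
    while squared(add(r, 1)) <= n:
        r = add(r, 1)
    return r

# The elementary steps differ, yet level 2 produces the same answers.
for n in (0, 9, 10, 50):
    print(n, square_root(n, squared_by_repeated_addition),
          square_root(n, squared_by_odd_numbers))
```

Passing the level-3 definition in as a parameter is a design choice made only to keep the comparison in one place; in the article’s terms, it corresponds to rewriting level 3 of Figure 2 while leaving level 2 untouched.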

If we were to adopt a strictly behavioristic use of the word consciousness, then this understanding of computerized consciousness might be satisfactory, granting, of course, that someone could indeed create a program with the required higher-order organization. Using such a criterion, we would designate certain patterns of behavior as conscious and others as not. Generally, a sequence of behavioral events would have to be quite long to qualify as “conscious.” For example, a long speech may exhibit certain complex features that identify it as “conscious,” but none of the words or short phrases that make it up would be long enough to display such features. By the same criterion, one might want to designate a certain sequence of computer operations as “conscious” because it possesses certain abstract higher-order properties. Then one might analyze the overall behavior of the computer as “conscious” in terms of these properties, whereas any single elementary operation would be too short to qualify.

Defining Consciousness

We are interested, however, not in categorizing patterns of behavior as conscious or unconscious but rather in understanding the actual subjective experience of conscious awareness. To clearly distinguish this conception of consciousness from the behavioral one, we shall briefly pause here to describe it and establish its status as a subject of serious inquiry. By consciousness we mean the awareness of thoughts and sensations that we directly perceive and know that we perceive. Since other persons are similar to us, it is natural to suppose that they are similarly conscious. If this is accepted, then it follows that consciousness is an objectively existing feature of reality that tends to be associated with certain material structures, such as the bodies of living human beings.

Now, when an ordinary person hears that a computer can be conscious, he naturally tends to interpret this statement in the sense we have just described. Thus he will imagine that a computer can have subjective, conscious experiences similar to his own. Certainly this is the idea behind such stories as the one with which we began this piece. One imagines the computerized “Mr. Jones,” as he looks about the room through the computer’s TV cameras, actually feeling astonishment at his strange transformation.

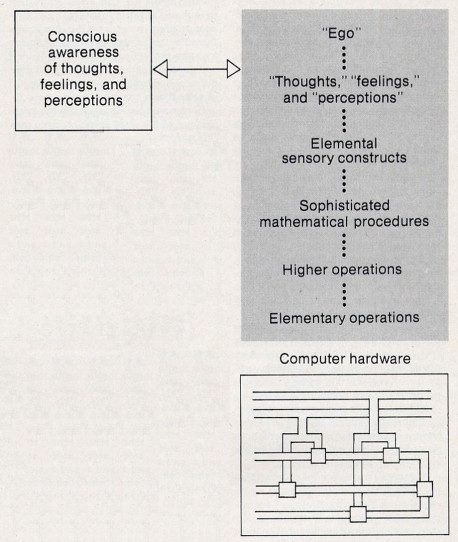

If the computerized Mr. Jones could indeed have such a subjective experience, then we would face the situation depicted in Figure 3 below. On the one hand, the conscious experience of the computer would exist—its subjective experience of colors, sounds, thoughts, and feelings would be an actual reality. On the other hand, the physical structures of the computer would exist. However, we cannot directly correlate consciousness with the actual physical processes of the computer, nor can we relate consciousness to the execution of individual elementary operations, such as those in Figure 1. According to the artificial-intelligence researchers, consciousness should correspond to higher-order abstract properties of the computer’s physical states—properties described by symbols such as thought and feeling, which stand at the top of a lofty pyramid of abstract definitions. Indeed, these abstract properties are the only conceivable features of our sentient computer that could have any direct correlation with the contents of consciousness.

Since consciousness is real, however, and these abstract properties are not, we can conclude only that something must exist in nature that can somehow “read” these properties from the computer’s physical states. This entity is represented in Figure 3 by the arrow connecting the real contents of consciousness with higher levels in the hierarchy of abstract symbolic descriptions of the sentient computer. The entity must have the following characteristics:

(1) It must possess sufficient powers of discrimination to recognize certain highly abstract patterns of organization in arrangements of matter.

(2) It must be able to establish a link between consciousness and such arrangements of matter. In particular, it must modify the contents of conscious experience in accordance with the changes these abstract properties undergo as time passes and the arrangements of matter are transformed.

There is clearly no place for an entity of this kind in our current picture of what is going on in a computer. Indeed, we can conclude only that this entity must correspond to a feature of nature completely unknown to modern science. This, then, is the conclusion forced upon us if we assume that a computer can be conscious. Of course, we can easily avoid this conclusion by supposing that no computer will ever be conscious, and this may indeed be the case. Aside from computers, however, what can we say about the relation between consciousness and the physical body in a human being? On one hand we know human beings possess consciousness, and on the other modern science teaches us that the human body is an extremely complex machine composed of molecular components. Can we arrive at an understanding of human consciousness that does not require the introduction of an entity of the kind described by statements (1) and (2)?

Ironically, if we try to base our understanding on modern scientific theory, then the answer is no. The reason is that all modern scientific attempts to understand human consciousness depend, directly or indirectly, on an analogy between the human brain and a computer. In fact, the scientific model for human consciousness is machine consciousness!

The Mechanical Brain

Modern scientists regard the brain as the seat of consciousness. They understand the brain to consist of many different kinds of cells, each a molecular machine. Of these, the nerve cells, or neurons, are known to exhibit electrochemical activities roughly analogous to those of the logical switching elements used in computer circuitry. Although scientists at present understand the brain’s operation only vaguely, they generally conjecture that these neurons form an information-processing network equivalent to a computer’s.
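The analogy between neurons and logical switching elements is often illustrated with the McCulloch–Pitts threshold unit, a deliberately simplified textbook idealization that is not drawn from this article: a unit “fires” (outputs 1) when the weighted sum of its 0/1 inputs reaches a threshold. Real neurons are far more complicated; the weights and thresholds below are assumptions chosen only to reproduce familiar logic gates, and the function names are hypothetical.

```python
def threshold_unit(inputs: list[int], weights: list[int], threshold: int) -> int:
    """Idealized neuron: output 1 if the weighted sum of 0/1 inputs reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0

# Single units that behave like switching elements (weights and thresholds chosen by hand).
def and_gate(a: int, b: int) -> int:
    return threshold_unit([a, b], [1, 1], 2)

def or_gate(a: int, b: int) -> int:
    return threshold_unit([a, b], [1, 1], 1)

def not_gate(a: int) -> int:
    return threshold_unit([a], [-1], 0)

# A small network of units: exclusive-or, which no single unit of this kind can compute.
def xor_gate(a: int, b: int) -> int:
    return and_gate(or_gate(a, b), not_gate(and_gate(a, b)))

for a in (0, 1):
    for b in (0, 1):
        print(a, b, and_gate(a, b), or_gate(a, b), xor_gate(a, b))
```

Because such units can be wired into any logic circuit, a network of them is, in this idealization, an information-processing device of the same kind as a computer, which is the conjecture the paragraph above describes.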

This conjecture naturally leads to the picture of the brain shown in Figure 4. Here thoughts, sensations, and feelings must correspond to higher levels of brain activity, which resemble the higher organizational levels of a complex computer program. Just as the higher levels of such a program are abstract, these higher levels of brain activity must also be abstract. They can have no actual existence, for all that actually happens in the brain is that certain physical processes take place, such as the pumping of sodium ions through the membranes of nerve cells. If we try to account for the existence of human consciousness in the context of this picture of the brain, we must conclude (by the same reasoning as before) that some entity described by statements (1) and (2) must exist to account for the connection between consciousness and abstract properties of brain states.

Furthermore, if we closely examine the current scientific world view, we can see that its conception of the brain as a computer does not depend merely on some superficial details of our understanding of the brain. Rather, on a deeper level, the conception follows necessarily from a mechanistic view of the world. Mechanistic explanations of phenomena are, by definition, based on systems of calculation. By Church’s thesis, all systems of calculation can in principle be represented in terms of computer operations. In effect, all explanations of phenomena in the current scientific world view can be expressed in terms of either computer operations or some equivalent symbolic scheme.
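As a small illustration of a mechanistic explanation reducing to calculation, consider an object falling from rest. Assuming Newton’s law with constant gravity of about 9.81 m/s² and no air resistance (simplifications introduced here, not in the article), the explanation of the fall can be carried out entirely as repeated additions and multiplications of numbers. The function name `fall_distance` and the step size are hypothetical choices.

```python
G = 9.81  # m/s^2, approximate surface gravity (assumed constant; air resistance ignored)

def fall_distance(seconds: float, dt: float = 0.001) -> float:
    """Distance fallen from rest, found by stepping Newton's law forward in small time steps."""
    velocity, distance = 0.0, 0.0
    for _ in range(round(seconds / dt)):
        velocity += G * dt         # change in speed over one step
        distance += velocity * dt  # change in position over one step
    return distance

print(fall_distance(1.0))  # close to the closed-form value 0.5 * G * 1**2 = 4.905
```

Every line of this “explanation” is arithmetic on stored numbers, so by Church’s thesis it could be rewritten as a list of elementary operations like the square-root example.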

This implies that all attempts to describe human consciousness within the basic framework of modern science must lead to the same problems we have encountered in our analysis of machine consciousness. To account for consciousness, we shall inevitably require some entity like the one described in statements (1) and (2). Yet in the present theoretical system of science we find nothing, either in the brain or in a digital computer, that corresponds to this entity. Indeed, the present theoretical system could never provide for such an entity, for any mechanistic addition to the current picture of, say, the brain would simply constitute another part of that mechanistic system, and the need for an entity satisfying (1) and (2) would still arise.

Clearly, then, we must revise the basic theoretical approach of modern science if we are adequately to account for the nature of conscious beings. If we cannot do this in mechanistic terms, then we must adopt some other mode of scientific explanation. This brings us to the question of just what constitutes a scientific explanation.

A Nonmechanistic Explanation

Any theory intended to explain a phenomenon must make use of a variety of descriptive terms. We may define some of these terms by combining other terms of the theory, but there must inevitably be some terms, called primitive or fundamental, that we cannot so define. In a mechanistic theory, all the primitive terms correspond to numbers or arrangements of numbers, and scientists at present generally try to cast all their theories into this form. But a theory does not have to be mechanistic to qualify as scientific. It is perfectly valid to adopt the view that a theoretical explanation is scientific if it is logically consistent and if it enables us to deal practically with the phenomenon in question and enlarge our knowledge of it through direct experience. Such a scientific explanation may contain primitive terms that cannot be made to correspond to arrangements of numbers.

In our remaining space, we shall outline an alternative approach to the understanding of consciousness: an approach that is scientific in the sense we have described, but that is not mechanistic. Known as sanatana-dharma, this approach is expounded in India’s ancient Vedic literatures, such as Bhagavad-gita and Srimad-Bhagavatam. We shall give a short description of sanatana-dharma and show how it satisfactorily accounts for the connection between consciousness and mechanism. This account is, in fact, based on the kind of entities described in statements (1) and (2), and sanatana-dharma very clearly and precisely describes the nature of these entities. Finally, we shall briefly indicate how this system of thought can enlarge our understanding of consciousness by opening up new realms of practical experience.

By accepting conscious personality as the irreducible basis of reality, sanatana-dharma departs radically from the mechanistic viewpoint. For those who subscribe to this viewpoint, all descriptions of reality ultimately boil down to combinations of simple, numerically representable entities, such as the particles and fields of physics. Sanatana-dharma, on the other hand, teaches that the ultimate foundation of reality is an Absolute Personality, who can be referred to by many personal names, such as Krsna and Govinda. This primordial person fully possesses consciousness, senses, intelligence, will, and all other personal faculties. According to sanatana-dharma, all of these attributes are absolute, and it is not possible to reduce them to patterns of transformation of some impersonal substrate. Rather, all phenomena, both personal and impersonal, are manifestations of the energy of the Supreme Person, and we cannot fully understand these phenomena without referring to this original source.

The Supreme Person has two basic energies, the internal energy and the external energy. The external energy includes what is commonly known as matter and energy. It is the basis for all the forms and phenomena we perceive through our bodily senses, but it is insentient.

The internal energy, on the other hand, includes innumerable sentient beings called atmas. Each atma is conscious and possesses all the attributes of a person, including senses, mind, and intelligence. These attributes are inherent features of the atma, and they are of the same irreducible nature as the corresponding attributes of the Supreme Person. The atmas are atomic, individual personalities who cannot lose their identities, either through amalgamation into a larger whole or by division into parts.

Sanatana-dharma teaches that a living organism consists of an atma in association with a physical body composed of the external energy. Bhagavad-gita describes the physical body as a machine, or yantra, and the atma as a passenger riding in this machine. When the atma is embodied, his natural senses are linked up with the physical information-processing system of the body, and thus he perceives the world through the bodily senses. The atma is the actual conscious self of the living being, and the body is simply an insentient vehicle-like mechanism.

If we refer back to our arguments involving machine consciousness, we can see that in the body the atma plays the role specified by statements (1) and (2). The atma is inherently conscious, and he possesses the sensory faculties and intelligence needed to interpret abstract properties of complex brain states. In fact, if we examine statements (1) and (2) we can see that they are not merely satisfied by the atma; they actually call for some similar kind of sentient, intelligent entity.

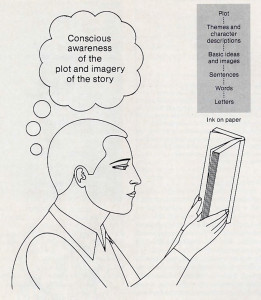

We can better understand the position of the atma as the conscious perceiver of the body by considering what happens when a person reads a book. When a person reads, he becomes aware of various thoughts and ideas corresponding to higher-order abstract properties of the arrangement of ink on the pages. Yet none of these abstract properties actually exists in the book itself, nor would we imagine that the book is conscious of what it records. To establish a correlation between the book on the one hand and conscious awareness of its contents on the other, there must be a conscious person with intelligence and senses who can read the book. Similarly, for conscious awareness to be associated with the abstract properties of states of a machine, there must be some sentient entity to read these states.
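The same point can be made with a computer’s own memory. In this illustrative sketch, four stored numbers stand in for the ink on the page; the byte values are arbitrary choices for the example. Read under one convention they are a list of numbers, under the ASCII convention a word, and under a base-256 convention a single large integer. None of these readings is written into the bytes; each is supplied by whoever applies the rule.

```python
# Four stored numbers: the "ink on the page" (arbitrary values chosen for illustration).
data = bytes([71, 105, 116, 97])

# Three readers, three rules, three "contents"; none is contained in the bytes themselves.
as_numbers = list(data)                   # [71, 105, 116, 97]
as_text = data.decode("ascii")            # 'Gita' under the ASCII convention
as_integer = int.from_bytes(data, "big")  # one large number under a base-256 convention

print(as_numbers, as_text, as_integer)
```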

At this point one might object that if we try to explain a conscious person by positing the existence of another conscious person within his body, then we have actually explained nothing at all. One can then ask how the consciousness of this person is to be explained, and this leads to an infinite regress.

In response, we point out that this objection presupposes that an explanation of consciousness must be mechanistic. But our arguments about machine consciousness actually boil down to the observation that conscious personality cannot be explained mechanistically. An infinite regress of this kind is in fact unavoidable unless we either give up the effort to understand consciousness or posit the existence of a sentient entity that cannot be reduced to a combination of insentient parts. Sanatana-dharma regards conscious personality as fundamental and irreducible, and thus the “infinite regress” stops with the atma.

The real value of the concept of the atma as an explanation of consciousness is that it leads directly to further avenues of study and exploration. The very idea that the conscious self possesses its own inherent senses suggests that these senses should be able to function independently of the physical apparatus of the body. In fact, according to sanatana-dharma the natural senses of the atma are indeed not limited to interpreting the physical states of the material brain. The atma can attain much higher levels of perception, and sanatana-dharma primarily deals with effective means whereby a person can realize these capacities in practice.

The Science of Consciousness

Since neither the Supreme Person nor the individual atmas are combinations of material elements, it is not possible to scrutinize them directly through the material sensory apparatus. On the basis of material sensory information, we can only infer their existence by indirect arguments, such as the ones presented in this article. According to sanatana-dharma, however, we can directly observe and understand both the Supreme Person and the atmas by taking advantage of the natural sensory faculties of the atma. Thus sanatana-dharma provides the basis for a true science of consciousness.

Since this science deals with the full potentialities of the atma, it necessarily ranges far beyond the realm of mechanistic thinking. When the atma is restricted to the physically embodied state, it can participate in personal activities only through the medium of machines, such as the brain, that generate behavior by the concatenation of impersonal operations. In this stultifying situation, the atma cannot manifest its full potential.

But the atma can achieve a higher state of activity, in which it participates directly in a relation of loving reciprocation with the Supreme Person, Krsna. Since both the atma and Krsna are by nature sentient and personal, this relationship involves the full use of all the faculties of perception, thought, feeling, and action. In fact, the direct reciprocal exchange between the atma and Krsna defines the ultimate function and meaning of conscious personality, just as the interaction of an electron with an electric field might be said to define the ultimate meaning of electric charge. Sanatana-dharma teaches that the actual nature of consciousness can be understood by the atma only on this level of conscious activity.

Thus, sanatana-dharma provides us with an account of the nature of the conscious being that takes us far beyond the conceptions of the mechanistic world view. While supporting the idea that the body is a machine, this account maintains that the essence of conscious personality is to be found in an entity that interacts with this machine but is wholly distinct from it. Furthermore, one can know the true nature of this entity only in an absolute context completely transcending the domain of machines.

We have argued that the strictly mechanistic approach to life cannot satisfactorily explain consciousness. If we are to progress in this area, we clearly need some radically different approach, and we have briefly indicated how sanatana-dharma provides such an alternative. Sanatana-dharma explains the relationship between consciousness and machines by boldly positing that conscious personality is irreducible. It then goes on to elucidate the fundamental meaning of personal existence by opening up a higher realm of conscious activity—a realm that can be explored by direct experience. In contrast, the mechanistic world view can at best provide us with the sterile, behavioristic caricature of conscious personality epitomized by the computerized Mr. Jones.

Notes

In actual computer applications, much more sophisticated methods of calculating square roots would be used. The method presented in Figure 1 is intended to provide a simple example of the nature of computer programs.

For the sake of clarity, let us briefly indicate why this is so. Suppose one could describe a model of a sentient entity by means of a computer program. Then a certain level of organization of the program would correspond to the elementary constituents of the model. For example, in a quantum mechanical model these might be quantum wave functions. The level of the program corresponding to “thoughts” and “feelings” would be much higher than this level. Hence we conclude that this “cognitive” level would not in any sense exist in the actual system being modeled. It would correspond only to abstract properties of the states of this system, and thus an entity of the kind described in (1) and (2) would be needed to establish the association between the system and the contents of consciousness.
